Prompt engineering techniques

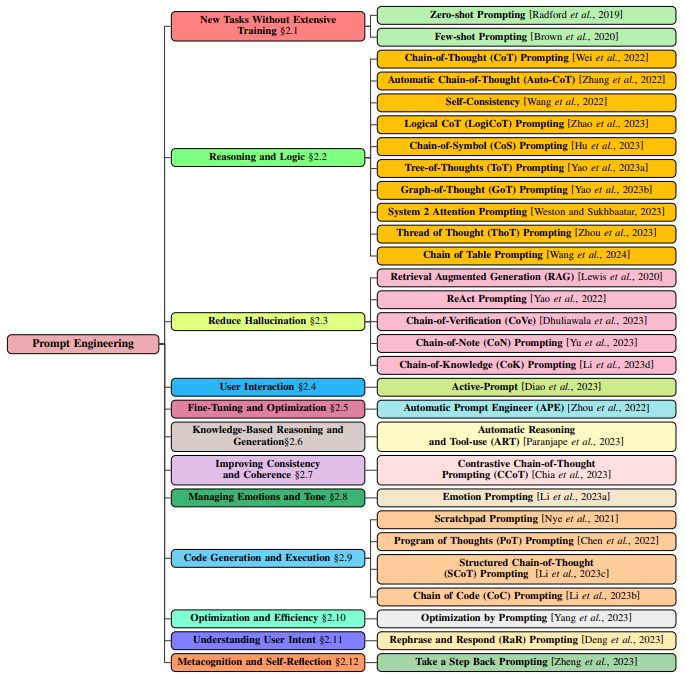

Prompt engineering has emerged as a key technique for improving the capabilities of pre-trained large language models (LLMs) and vision-language models (VLMs). It involves strategically designing task-specific instructions, called prompts, to guide the model’s output without changing its parameters. By steering model outputs through carefully crafted instructions rather than weight updates, prompt engineering lets these models perform well across diverse tasks and domains. This adaptability differs from traditional paradigms, where model retraining or extensive fine-tuning is often required for task-specific performance.

Sources:

https://arxiv.org/pdf/2402.07927

https://arxiv.org/pdf/2310.14735

Basic elements of GPT

When GPT-4 receives an input prompt, the input text is first converted into tokens that the model can interpret and process. These tokens are then handled by transformer layers, which capture their relationships and context. Within these layers, attention mechanisms assign different weights to tokens based on their relevance and context. After attention processing, the model forms its internal encodings of the input data, called intermediate representations. These representations are then decoded into human-readable text.

The basic principle of prompting is to “be clear and specific.” This involves formulating unambiguous and specific prompts, which can guide the model towards generating the desired result.

Role prompting is another fundamental method of prompt engineering. It involves giving the model a specific role to play, such as that of a helpful assistant or a knowledgeable expert. This method can be particularly effective in guiding the model's responses and ensuring that they match the desired outcome.

In prompt engineering, triple quotes are a technique used to separate different parts of a prompt or to encapsulate multi-line strings. This technique is especially useful when dealing with complex prompts that include multiple components or when the prompt itself contains quotes, which helps the model better understand the instructions.
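As a minimal sketch of the delimiter technique, the helper below wraps the text to be processed in triple quotes so that it stays visually separate from the instruction. The function name `build_delimited_prompt` and the sample strings are illustrative assumptions, not part of any library.

```python
def build_delimited_prompt(instruction: str, text: str) -> str:
    """Return a prompt where the text to process is fenced by triple quotes."""
    # The instruction comes first; the delimited block follows on its own lines.
    return f'{instruction}\n\n"""\n{text}\n"""'


prompt = build_delimited_prompt(
    "Summarize the text delimited by triple quotes in one sentence.",
    'The reviewer wrote: "great product", then asked for a refund.',
)
print(prompt)
```

Because the delimiter differs from the ordinary double quotes inside the text, the quoted review does not blur the boundary between instruction and content.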

Due to the non-deterministic nature of LLMs, it is often beneficial to try multiple times when generating answers. This technique, often called “resampling,” involves running the model multiple times with the same prompt and selecting the best result. This approach can help overcome the inherent variability in model answers and increase the chances of obtaining a high-quality result.
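Resampling can be sketched as a simple best-of-n loop. In the example below, `generate` and `score` are placeholders supplied by the caller: `fake_generate` is a hypothetical stand-in that returns canned answers instead of calling a real model, and answer length is used as a deliberately naive quality score.

```python
from itertools import cycle
from typing import Callable


def resample_best(
    generate: Callable[[str], str],
    score: Callable[[str], float],
    prompt: str,
    n: int = 5,
) -> str:
    """Run the same prompt n times and keep the highest-scoring answer."""
    candidates = [generate(prompt) for _ in range(n)]
    return max(candidates, key=score)


# Hypothetical stand-in for a model call: cycles through canned answers.
_canned = cycle(["Paris.", "The capital of France is Paris.", "I am not sure."])


def fake_generate(prompt: str) -> str:
    return next(_canned)


best = resample_best(fake_generate, score=len, prompt="What is the capital of France?", n=3)
print(best)
```

In practice the scoring step might be a human review, a rubric, or a second model call; the loop itself stays the same.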

GPT plugins

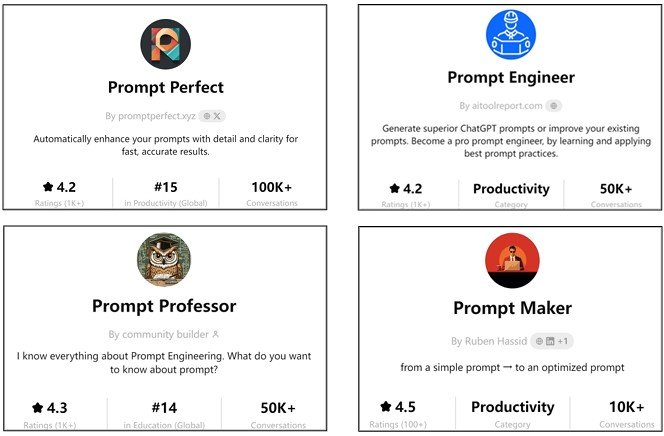

Before ending this discussion on prompt optimization techniques, we should mention the use of external prompt engineering assistants that have been developed recently and show promising potential. Unlike the methods presented previously, these tools can help us refine the prompt directly. They are able to analyze user inputs and then produce relevant results in a context they define themselves, thus amplifying the effectiveness of the prompts. Some of the plugins provided by the OpenAI GPT store are good examples of such tools.

Such integration can impact how LLMs interpret and respond to prompts, illustrating a link between prompt engineering and plugins. Plugins alleviate the laborious nature of complex prompt engineering, allowing the model to more efficiently understand or respond to user requests without requiring overly detailed prompts. Therefore, plugins can enhance the efficiency of prompt engineering while promoting improved user-centric effectiveness.

These package-like tools can be seamlessly integrated into Python and invoked directly. For example, the “Prompt Enhancer” plugin developed by AISEO can be invoked by starting the prompt with the word “AISEO” to allow the AISEO prompt generator to automatically enhance the provided LLM prompt.

Similarly, another plugin called "Prompt Perfect" can be used by starting the prompt with "perfect" to automatically improve the prompt, aiming for the "perfect" prompt for the task at hand.

However, while using plugins to enhance prompts is simple and convenient, it is not always clear which prompt engineering technique, or combination of techniques, is implemented by a given plugin, given the closed nature of most plugins.

How LLMs work

ChatGPT, a member of the GPT family, is built on a transformer architecture, which is at the heart of how it processes and generates answers. Transformers use a series of attention mechanisms to evaluate the importance of different parts of the input text, ensuring that the most relevant information is prioritized when generating an output.

The original transformer architecture consists of encoders and decoders. Encoders process the input data and capture contextual relationships between words, while decoders use this context to generate a coherent output. GPT models, including ChatGPT, use only the decoder stack. Self-attention is a key component, allowing the model to focus on different parts of the text based on their relevance to the task at hand. In the case of ChatGPT, once a prompt is submitted, the input text is divided into smaller units called tokens. These tokens are processed through multiple layers, which analyze their relationships to generate a final answer.

Additionally, ChatGPT is refined with Reinforcement Learning from Human Feedback (RLHF), where human feedback is incorporated to improve the quality of the model’s responses. This process helps further align the model with user preferences and needs, ensuring that it generates results that are not only accurate, but also contextually relevant and consistent.

By combining the transformer architecture with prompt engineering, ChatGPT excels at a variety of tasks, from casual conversation to specialized problem solving, highlighting the importance of prompt engineering in maximizing the capabilities of LLMs.

Elements of Prompt Engineering

At the heart of how ChatGPT and other transformer-based models work is tokenization. Tokenization involves breaking down the input text into smaller units called tokens, which can be words, subwords, or even individual characters. These tokens serve as the basic building blocks for input processing, allowing the model to analyze patterns and relationships within the data. The tokens are then processed through the transformer’s attention mechanisms.
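To make the idea of subword tokens concrete, here is a toy greedy longest-match tokenizer. It is only an illustration: production models use learned schemes such as byte-pair encoding, and the four-entry vocabulary below is invented for this example.

```python
def tokenize(text: str, vocab: set[str]) -> list[str]:
    """Split text into the longest vocabulary pieces, falling back to single characters."""
    tokens = []
    for word in text.lower().split():
        start = 0
        while start < len(word):
            # Try the longest remaining piece first, then shrink it.
            for end in range(len(word), start, -1):
                piece = word[start:end]
                if piece in vocab or end == start + 1:
                    tokens.append(piece)
                    start = end
                    break
    return tokens


# Hypothetical vocabulary, chosen only for this example.
vocab = {"token", "ization", "is", "fun"}
print(tokenize("Tokenization is fun", vocab))  # ['token', 'ization', 'is', 'fun']
```

The word "tokenization" is not in the vocabulary, so it is split into two known subwords; a string with no known pieces would fall back to individual characters.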

The self-attention mechanism is essential for understanding relationships between tokens. It allows the model to prioritize certain parts of the input based on their importance relative to the overall context. For example, in a prompt about climate change, the model would pay more attention to tokens related to the topic (e.g., “global warming,” “carbon emissions”) while paying less attention to less relevant words (e.g., “a,” “the”).

This self-attention mechanism allows ChatGPT to generate responses that accurately reflect the context of the prompt. By understanding how different parts of the input text relate to each other, the model can capture complex relationships, such as cause and effect, and generate logical and coherent responses.
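The weighting described above can be sketched with scaled dot-product attention. This is a simplified illustration in plain Python: the token vectors are made-up numbers, and the same vectors serve as queries, keys, and values, whereas real models first apply learned projection matrices.

```python
import math


def softmax(xs: list[float]) -> list[float]:
    """Convert raw scores into weights that sum to 1."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Return (outputs, weights) for scaled dot-product attention."""
    d = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(dimension).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Each output is a weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Toy 2-dimensional vectors for three tokens (illustrative values only).
x = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
outputs, weights = self_attention(x, x, x)
print(weights[0])  # the first token weights the similar second token above the third
```

Tokens whose vectors point in similar directions receive larger weights, which is the mechanism behind "paying more attention" to related words.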

GPT-4 processes prompts in multiple steps to generate relevant and accurate results. Here’s a simplified description of how it works:

- Input tokenization: When a user provides a prompt, GPT-4 segments the text into smaller units that can be interpreted numerically. This process allows the model to analyze the text in a structured manner.

- Self-attention and contextual encoding: Tokens pass through multiple layers of self-attention mechanisms. Each layer captures relationships between tokens at different levels of abstraction, helping the model understand both the local and global context of the input.

- Intermediate representations: As tokens move through the layers of the model, GPT-4 generates intermediate representations that encode the meaning and relationships between tokens. These representations evolve as they move through successive layers, gradually building a deeper understanding of the prompt.

- Decoding and Output Generation: Finally, GPT-4 generates output by predicting the next token in the sequence, one step at a time. The model builds on its acquired knowledge to generate consistent and contextually relevant responses, ensuring that each token is influenced by the tokens that preceded it.

This process allows GPT-4 to excel at tasks such as answering questions, performing logical reasoning, and generating detailed, human-like text. Refining inputs through attention and layered processing is critical to the model's ability to provide accurate and relevant outputs.
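The final decoding step, predicting one token at a time, can be sketched with a toy lookup table. The probabilities in `NEXT_TOKEN_PROBS` are invented for illustration, and the table conditions only on the last token, whereas a real model conditions on every preceding token.

```python
# Hypothetical next-token probabilities, invented for this example.
NEXT_TOKEN_PROBS = {
    "the": {"cat": 0.6, "dog": 0.4},
    "cat": {"sat": 0.7, "ran": 0.3},
    "sat": {"<end>": 1.0},
}


def generate(prompt_tokens: list[str], max_new_tokens: int = 10) -> list[str]:
    """Greedy decoding: repeatedly append the most likely next token."""
    tokens = list(prompt_tokens)
    for _ in range(max_new_tokens):
        dist = NEXT_TOKEN_PROBS.get(tokens[-1])
        if dist is None:
            break  # no known continuation for this token
        next_token = max(dist, key=dist.get)
        if next_token == "<end>":
            break  # the model signals that the answer is complete
        tokens.append(next_token)
    return tokens


print(generate(["the"]))  # ['the', 'cat', 'sat']
```

Always picking the most likely token is the deterministic extreme; sampling settings such as temperature and top-p relax this choice.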

Creating effective prompts is essential to ensuring that ChatGPT provides high-quality responses. There are several key techniques for designing prompts:

Clarity and specificity: The clearer and more specific a prompt is, the better the model can understand and respond appropriately. Ambiguous or overly broad prompts often result in vague or irrelevant responses. For example, instead of asking “Tell me about the technology,” a more specific prompt like “Explain how blockchain technology is used in finance” will generate a more focused and informative response.

Role prompting: Assigning a specific role to ChatGPT in the prompt can significantly improve the relevance and quality of its response. For example, specifying “You are an expert historian” in the prompt tends to make the model generate a more detailed and contextually rich response with historical information. Role prompting is particularly useful for specialized tasks, such as providing technical explanations or simulating professional roles (e.g., doctor, teacher, software engineer).
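A role is often supplied as a separate system message. The sketch below assumes a chat-style message list of `role`/`content` dictionaries, a format many chat APIs accept; the helper name `build_role_messages` is hypothetical, and no API call is made.

```python
def build_role_messages(role_description: str, question: str) -> list[dict[str, str]]:
    """Pair a role-setting system message with the user's question."""
    return [
        {"role": "system", "content": f"You are {role_description}."},
        {"role": "user", "content": question},
    ]


messages = build_role_messages(
    "an expert historian",
    "Why did the Western Roman Empire decline?",
)
for message in messages:
    print(f"{message['role']}: {message['content']}")
```

Keeping the role in its own message makes it easy to reuse the same question with different personas and compare the answers.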

Effective prompt design also involves structuring inputs in ways that help the model generate more accurate and nuanced responses. Here are some strategies:

- Contextual inputs: Adding relevant context to the prompt can help guide the model towards a more specific answer. For example, instead of simply asking “How does climate change affect agriculture?”, adding context such as “In the context of developing countries, how does climate change affect agricultural production?” ensures a more targeted and detailed answer.

- Layering information: When processing complex queries, structuring the prompt into sections can improve the model output. For example, a prompt such as “Explain the main factors driving inflation, with a focus on monetary policy, supply chain disruptions, and labor market trends” ensures that the model addresses each component in a structured and logical way.

- Iterative prompts: For tasks that require refinement, using iterative prompts can lead to better results. Users can refine the prompt based on previous model output, guiding it step-by-step through complex explanations or problem-solving tasks. This approach is particularly useful for multi-step reasoning or technical tasks.

By using these techniques, users can significantly improve the quality of ChatGPT results, making the responses more tailored to their specific goals.
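The contextual and layered strategies above can be combined in a small prompt builder. This is one possible layout, not a standard: the labels "Context:" and "Task:" and the function name `build_layered_prompt` are assumptions made for illustration.

```python
def build_layered_prompt(task: str, context: str = "", focus_points: tuple[str, ...] = ()) -> str:
    """Assemble a prompt from optional context, a task, and numbered focus points."""
    parts = []
    if context:
        parts.append(f"Context: {context}")
    parts.append(f"Task: {task}")
    if focus_points:
        parts.append("Address each of the following:")
        parts.extend(f"{i}. {point}" for i, point in enumerate(focus_points, 1))
    return "\n".join(parts)


prompt = build_layered_prompt(
    task="Explain the main factors driving inflation.",
    context="The audience is first-year economics students.",
    focus_points=("monetary policy", "supply chain disruptions", "labor market trends"),
)
print(prompt)
```

For iterative prompting, the same builder can be called again with a refined task or extra focus points based on the previous answer.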

Performance optimization

Evaluating the results of advanced prompting techniques is essential to ensure that the outputs generated by the model are accurate, reliable, and useful for the task at hand. Several evaluation methods can be applied depending on the nature of the research task:

Accuracy and relevance: For factual tasks, such as synthesizing research or answering specific questions, the accuracy of the results should be checked against reliable sources. This is especially important for zero-shot or few-shot tasks, where the model relies heavily on pre-existing knowledge.

Logical consistency: When using chain-of-thought or self-consistency prompting, it is important to assess the logical flow of the reasoning. Does the result make sense step by step? For research tasks that involve multi-step reasoning, make sure that each step is valid and contributes to the overall conclusion.

Consistency: In self-consistency prompting, assess the consistency of multiple model results. If multiple responses converge to the same answer, this increases confidence in the accuracy of the result. If there is significant variation, it may be necessary to refine the prompt or task.

Completeness: Especially with chain-of-thought (CoT) prompting, make sure that the model addresses all parts of the task. For example, if the prompt asks the model to explain both natural and human drivers of climate change, the answer should cover both aspects comprehensively.

Bias and objectivity: Assess whether the model has introduced bias into its responses, especially for sensitive research topics. The model should remain objective and refrain from giving biased opinions unless the task specifically requires subjective input.

User testing: For tasks requiring complex reasoning, such as solving mathematical or scientific problems, results can be evaluated by subject matter experts or through user studies to assess their validity and usability.

By applying these evaluation methods, users can ensure that advanced prompting techniques produce reliable and high-quality results for various research tasks.
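The consistency check described above can be sketched as a majority vote over several sampled answers. The sample answers below are made up, and the normalization (trimming whitespace, lowercasing) is a simplifying assumption; real answers usually need task-specific parsing before they can be compared.

```python
from collections import Counter


def consistency_check(answers: list[str]) -> tuple[str, float]:
    """Return the most common answer and the share of samples that agree with it."""
    normalized = [answer.strip().lower() for answer in answers]
    top_answer, count = Counter(normalized).most_common(1)[0]
    return top_answer, count / len(normalized)


# Hypothetical final answers from four runs of the same prompt.
answer, agreement = consistency_check(["42", "42 ", "41", "42"])
print(answer, agreement)  # 42 0.75
```

A low agreement ratio is a signal to refine the prompt or the task rather than to trust the majority answer.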

Optimizing prompt performance in large language models (LLMs) like GPT-4 is critical to ensuring that answers are accurate, relevant, and consistent with user expectations. By adjusting how the model interprets inputs and generates outputs, users can exercise greater control over the quality of answers. Two key strategies for optimizing prompt performance are tuning temperature and sampling settings, and using contextual information.

Temperature: This parameter controls the randomness of the model output. The scale typically ranges from 0 to 1, although some APIs accept higher values.

Lower temperatures (closer to 0) produce more targeted and deterministic outputs. The model is more likely to choose the most likely next word, leading to accurate and consistent answers. This is ideal for factual, technical, or formal writing where accuracy and clarity are essential.

Higher temperatures (closer to 1) introduce more diversity and randomness into the output. The model is less constrained by probability, which encourages creativity and variability in responses. This setting works well for creative tasks, such as story generation, brainstorming, or open-ended questions.

Example:

Prompt: “Write a formal explanation of quantum mechanics.”

With temperature set to 0.2, the model will provide a clear and accurate explanation based on high probability tokens.

With a temperature of 0.8, the response may include more varied, potentially creative or unconventional explanations that could be useful for exploring different perspectives.
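The effect of temperature can be illustrated by dividing the model's raw scores (logits) by the temperature before applying softmax. The three logits below are invented, and this sketch requires a temperature above 0; APIs that accept 0 typically treat it as always picking the most likely token.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Turn logits into probabilities, sharpened or flattened by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be greater than 0 in this sketch")
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical logits for three candidate next tokens.
logits = [2.0, 1.0, 0.1]
low = softmax_with_temperature(logits, 0.2)
high = softmax_with_temperature(logits, 0.8)
print([round(p, 3) for p in low])
print([round(p, 3) for p in high])
```

At 0.2 almost all probability mass sits on the top token, while at 0.8 the alternatives keep a noticeable share, which is why higher temperatures yield more varied text.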

Top-p (nucleus) sampling: In top-p sampling, the model does not strictly follow the highest-probability path. Instead, it ranks candidate next tokens by probability, keeps the smallest set whose cumulative probability reaches a user-defined threshold (p), and samples the next token from that set.

Lower values of p (e.g., 0.5) lead to more conservative responses, while higher values (e.g., 0.9) introduce more variety into the output.

Example:

Prompt: “Describe potential future applications of artificial intelligence in healthcare.”

With a lower p value of 0.5, the model will provide standard and highly probable responses, such as improvements in diagnostic tools and personalized treatments.

With a higher p value of 0.9, the model will include more diverse and possibly speculative responses, such as AI advances in emotional support for patients or new applications in telemedicine.
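A minimal sketch of the top-p filter follows: tokens are ranked by probability, kept until their cumulative probability reaches p, and then renormalized so a token can be sampled from the reduced set. The token probabilities are invented to mirror the healthcare example.

```python
import random


def top_p_filter(probs: dict[str, float], p: float) -> dict[str, float]:
    """Keep the most likely tokens until their cumulative probability reaches p."""
    ranked = sorted(probs.items(), key=lambda item: item[1], reverse=True)
    kept, cumulative = [], 0.0
    for token, prob in ranked:
        kept.append((token, prob))
        cumulative += prob
        if cumulative >= p:
            break
    total = sum(prob for _, prob in kept)
    # Renormalize so the kept probabilities sum to 1.
    return {token: prob / total for token, prob in kept}


# Hypothetical next-token probabilities.
probs = {"diagnostics": 0.5, "treatment": 0.3, "telemedicine": 0.15, "companionship": 0.05}
narrow = top_p_filter(probs, 0.5)
wide = top_p_filter(probs, 0.9)
print(sorted(narrow))  # ['diagnostics']
print(sorted(wide))    # ['diagnostics', 'telemedicine', 'treatment']

# Sample one token from the wider nucleus.
choice = random.choices(list(wide), weights=list(wide.values()))[0]
```

With p = 0.5 only the single most likely token survives, so the output is conservative; with p = 0.9 less likely options remain available for sampling.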

In many cases, the performance of a prompt can be significantly improved by integrating additional context into the input. This technique guides the model more effectively by providing it with relevant background information or framing the prompt in a specific way that leads to more targeted and accurate results.

Contextual prompts are designed to include external data, background knowledge, or specific instructions that help guide the model toward a more specific answer. For example, instead of asking a general question like “How important is renewable energy?”, incorporating context into the prompt, such as a geographic focus or a specific time period, can lead to more targeted and relevant answers.

Example:

Prompt: “According to the IPCC 2022 report, explain how renewable energy contributes to reducing carbon emissions in developing countries.”

The inclusion of the IPCC report in the prompt restricts the scope of the model, causing it to rely on specific knowledge about carbon emissions and renewable energy trends in developing countries.

By incorporating such contextual clues, users can tailor responses to their specific needs, making the model more responsive to complex or specialized requests.

Another effective strategy is to assign roles to the model, in combination with built-in context. For example, asking ChatGPT to “act as a climate scientist” when answering a question about renewable energy helps frame the result with the appropriate expertise and perspective.

Example:

Prompt: “As a climate scientist, explain the long-term environmental benefits of wind energy in Europe, focusing on the last decade.”

The role-based prompt gives the model clear guidance on the expertise expected in the response, while the built-in context focuses it on a specific geographic area and time period.