Least-to-Most Prompting, divide and conquer

Least-to-most prompting can be combined with other prompting techniques such as chain-of-thought and self-consistency. For some tasks, the two stages of least-to-most prompting (decomposing the problem, then solving the subproblems in sequence) can be merged into a single-pass prompt.

Break down the initial problem

Here is a practical example:

CUSTOMER INQUIRY: I just bought a T-shirt from your Arnold collection on March 1st. I saw that it was on discount, so bought a shirt that was originally $30, and got 40% off. I saw that you have a new discount for shirts at 50%. I'm wondering if I can return the shirt and have enough store credit to buy two of your shirts?

INSTRUCTIONS: You are a customer service agent tasked with kindly responding to customer inquiries. Returns are allowed within 30 days. Today's date is March 29th. There is currently a 50% discount on all shirts. Shirt prices range from $18-$100 at your store. Do not make up any information about discount policies. Determine if the customer is within the 30-day return window.

When asked directly, the model returns the wrong response, as shown below:

The least-to-most approach is then followed, illustrated here in the OpenAI playground.

The sequence starts by asking the model the following question (several prompts are used for consistency):

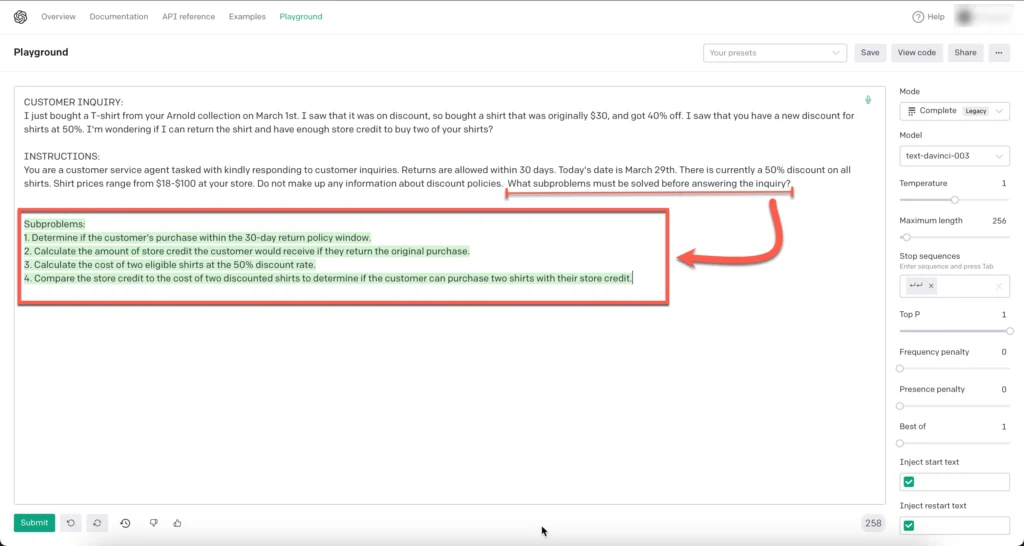

What subproblems must be solved before answering the inquiry?

The LLM then generates four subproblems that must be solved before the instruction can be completed.
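The decomposition stage can be sketched in a few lines of Python. This is a minimal illustration, not the setup used in the playground: `ask` is a hypothetical callable standing in for whatever sends a prompt to the model and returns its text, and the parser assumes the model replies with a numbered list, which is not guaranteed.

```python
import re
from typing import Callable, List

# The decomposition question used in this article.
DECOMPOSE_QUESTION = "What subproblems must be solved before answering the inquiry?"


def build_decomposition_prompt(inquiry_and_instructions: str) -> str:
    """Append the decomposition question to the original prompt text."""
    return f"{inquiry_and_instructions.strip()}\n\n{DECOMPOSE_QUESTION}"


def parse_subproblems(reply: str) -> List[str]:
    """Extract items from a numbered list such as '1. ...' or '2) ...'.

    Assumption: the model answers with one subproblem per numbered line.
    Lines that do not look like list items are ignored.
    """
    items = []
    for line in reply.splitlines():
        match = re.match(r"\s*\d+[.)]\s+(.*\S)", line)
        if match:
            items.append(match.group(1))
    return items


def decompose(prompt_text: str, ask: Callable[[str], str]) -> List[str]:
    """Run the decomposition stage; `ask` is a hypothetical model call."""
    return parse_subproblems(ask(build_decomposition_prompt(prompt_text)))
```

Passing the model call in as a parameter keeps the sketch independent of any particular client library, and makes it easy to substitute a canned reply while experimenting with the parser.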

Break down the problem into steps

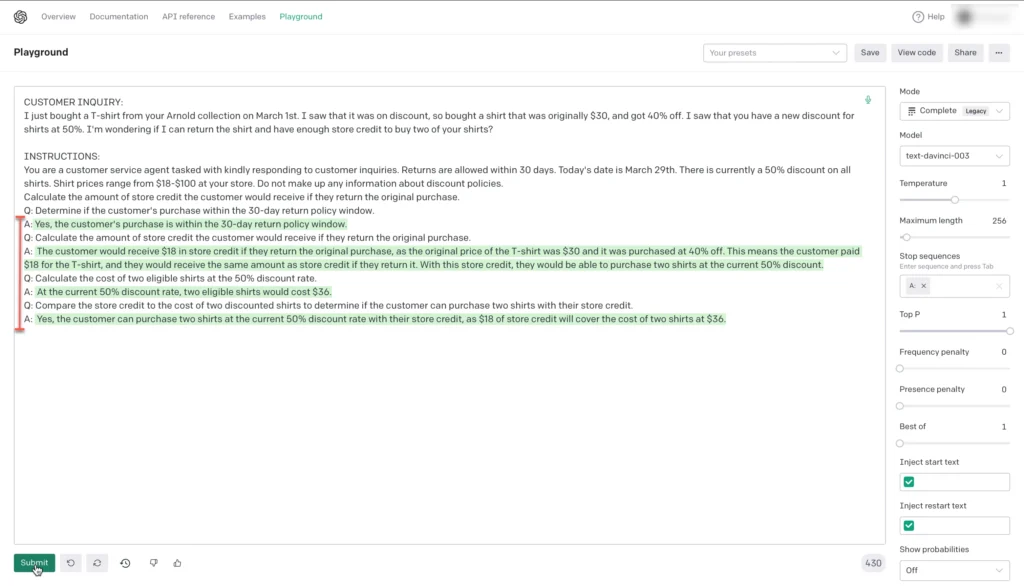

Following the least-to-most approach, the LLM is asked to answer the four subproblems, or tasks, that it has identified.

The subproblems are not asked all at once, however. They are posed one at a time, and each new prompt includes the previous subproblems together with their answers, so the prompt grows by one question/answer pair per step.
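The way the prompt grows can be sketched as a short loop. This is an assumption-laden illustration: `ask` is again a hypothetical stand-in for the model call, the `Q:`/`A:` labels are one arbitrary formatting choice, and the sketch carries forward every earlier question/answer pair rather than only the most recent one.

```python
from typing import Callable, List, Tuple


def solve_least_to_most(
    context: str,
    subproblems: List[str],
    ask: Callable[[str], str],
) -> List[Tuple[str, str]]:
    """Answer subproblems in order, feeding earlier answers back in.

    `ask` is a hypothetical callable that sends a prompt to the model
    and returns its reply as text.
    """
    history: List[Tuple[str, str]] = []
    for question in subproblems:
        # Start from the original inquiry and instructions.
        parts = [context.strip()]
        # Include every subproblem solved so far, with its answer.
        for earlier_question, earlier_answer in history:
            parts.append(f"Q: {earlier_question}\nA: {earlier_answer}")
        # End with the current subproblem, leaving the answer open.
        parts.append(f"Q: {question}\nA:")
        answer = ask("\n\n".join(parts)).strip()
        history.append((question, answer))
    return history
```

The returned list pairs each subproblem with its answer, and the last pair holds the answer to the final, hardest subproblem.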

The previous prompts are included for reference and act as few-shot examples.

The scenario presented to the LLM may be considered ambiguous, but the LLM does a remarkable job of following the sequence and arriving at the correct answer.
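The arithmetic behind the scenario can be checked independently of the model. The sketch below rests on three assumptions that the prompt does not state: the refund equals the price actually paid, the $18-$100 range refers to list prices before the current 50% discount, and the year is arbitrary (any year gives the same day count between March 1st and March 29th). Prices are kept in whole cents to avoid floating-point rounding.

```python
from datetime import date


def discounted_cents(price_cents: int, percent_off: int) -> int:
    """Price after a percentage discount, in whole cents."""
    return price_cents * (100 - percent_off) // 100


# Return window: purchase on March 1st, today is March 29th.
# The year is an arbitrary assumption.
days_since_purchase = (date(2023, 3, 29) - date(2023, 3, 1)).days
within_window = days_since_purchase <= 30

# Store credit, assuming the refund equals the price paid:
# a $30 shirt bought at 40% off.
store_credit = discounted_cents(3000, 40)

# Cheapest shirt today, assuming $18 is a pre-discount list price
# and the current 50% discount applies to it.
cheapest_now = discounted_cents(1800, 50)
two_cheapest = 2 * cheapest_now

print(days_since_purchase, within_window)  # 28 True
print(store_credit, two_cheapest)          # 1800 1800
print(store_credit >= two_cheapest)        # True
```

Under these assumptions the credit exactly covers two of the cheapest shirts. If the $18-$100 range is instead read as prices after the discount, two shirts cost at least $36 and the credit falls short, which is the kind of ambiguity the scenario leaves open.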

It is also possible to break down each step into a separate prompt. This allows the LLM to explain more of its reasoning at each step.